Building a Rolling Research Program

Role: Lead UX researcher | Client: Major technology platform | Methods: Moderated interviews, iterative prototype concept testing | Timeline: Rolling 3-week cycles across multiple product sub-teams

Overview

A consumer technology company was exploring how new advertising formats could fit into familiar user experiences across search, maps, listings, and business pages. They needed reliable user insight to refine concepts quickly and reduce uncertainty in early product decisions.

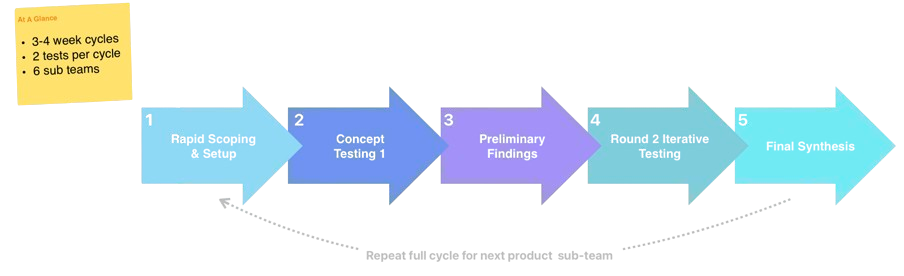

I served as the sole UX researcher in a multi-month rolling program, embedding with one product sub-team at a time for focused three-week cycles of agile research.

This structure created a repeatable research engine that enabled earlier testing, clearer decision-making, and faster iteration in a high-velocity environment.

Project Challenges

High ad-aversion. Users’ hatred of ads was palpable. Breaking through surface negativity meant structuring probes to uncover behavioral cues and value drivers.

New vertical every 3–4 weeks. Each cycle brought a new product area and 3–6 prototypes, so rapid onboarding and a flexible template bank were crucial.

Rigor at speed. With tight deadlines, I relied on repeatable processes to maintain consistency across sessions while managing last-minute prototype changes and shifting team priorities.

Research Approach & Methods

Rather than a single study, this program functioned as a repeatable research system, purpose-built for fast-moving teams.

Phase 1 - Rapid Scoping & Setup

Aligned with each sub-team on goals, decision points, and prototype variations.

Created stimulus decks, recruited participants, and quickly ramped up on 3–6 evolving concepts per cycle.

Phase 2 - Concept Testing Round 1

Ran moderated sessions to evaluate clarity, trust, and relevance, separating ad-aversion from actionable product insight.

Phase 3 - Preliminary Findings

Delivered a short midpoint readout to build early alignment, clarify what to refine, and sharpen hypotheses for Round 2.

Phase 4 - Round 2 Iterative Testing

Tested updated concepts and identified which refinements meaningfully improved user experience.

Phase 5 - Final Synthesis

Consolidated insights across rounds to guide what to refine, pause, or rethink before transitioning to the next sub-team’s cycle.

Key Themes

1. Ad-aversion shaped interpretation

Users’ strong dislike of ads was immediate and consistent. Breaking down why they felt this way, and which specific details made ads feel useful, helped uncover pathways that aligned user expectations with monetization needs.

2. Users evaluated new concepts through familiar cues

Users judged ad integrations using the same relevance and credibility signals they rely on when choosing rentals, agents, or service providers. They looked for familiar details such as specialization and trust markers such as images and reviews. New ad formats were interpreted through the structure of existing experiences, especially on business pages and service listings.

3. Iteration pace shaped insight quality

The rapid cadence revealed which refinements meaningfully improved comprehension and trust, while deprioritizing changes that added complexity without user value.

What Made This Research Operationally Effective

This program worked because the system behind it was intentionally lightweight, repeatable, and built for speed, using:

Reusable frameworks. A consistent structure for scoping, guides, stimulus decks, and synthesis made each cycle predictable and high-quality.

Early consensus-building. Preliminary findings briefs helped teams refine prototypes quickly and avoid multi-week misalignment.

Flexible, high-velocity collaboration. Tight alignment with designers allowed for rapid prototype updates between rounds.

Signal-over-noise. Separating ad-aversion from meaningful product insight ensured decisions were rooted in user behavior, not gut reactions.

Impact

1. Accelerated Decision-Making in Early Product Development

The 3–4-week cadence gave teams fast, evidence-backed direction on what to refine, what to pause, and what to explore next, shortening their iteration cycles and reducing guesswork and risk.

2. Clearer, More User-Aligned Ad Concepts

Findings guided improvements to which details were included, credibility markers, layout and hierarchy, and where and how new ad formats appeared within familiar surfaces.

These changes shifted ad concepts from “bothersome” toward “potentially useful.”

3. A Repeatable Research Engine for Multiple Teams

The program established a lightweight, scalable structure that made it easy for new sub-teams to onboard into a ready-made research workflow.

Teams gained earlier testing, clearer decision points, improved cross-functional alignment, and faster iteration windows.

Reflection

This program strengthened my ability to operate at the intersection of research, operations, and agile product development. Switching verticals every few weeks also sharpened my skills in rapid onboarding, high-velocity synthesis, and facilitating consensus on tight timelines.

Finally, being embedded in a rolling research program underscored the value of continuous evaluative research, especially in spaces where trust, clarity, and perceived usefulness determine whether an emerging ad experience succeeds or fails.